This post describes how to deploy cilium (and hubble) using docker on a Linux system with k3d or kind to test it as CNI and Service Mesh.

I wrote some scripts to do a local installation and evaluate cilium to use it at work (in fact we are using cilium on an EKS cluster now), but I thought it would be a good idea to share my original scripts in this blog just in case they are useful to somebody, at least for playing a little with the technology.

Links

As there is no point in explaining all the concepts related to cilium here, I’m providing some links for readers interested in learning more about it:

- What is CNI?

- What is Cilium?

- What is eBPF?

- What is Hubble?

- Why use Cilium with Kubernetes?

All the scripts and configuration files discussed in this post are available on my cilium-docker git repository.

Installation

For each platform we are going to deploy two clusters on the same docker network; I’ve chosen this model because it allows the containers to see the addresses managed by metallb from both clusters (the idea is to use those addresses for load balancers and treat them as if they were public).
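A minimal sketch of how the shared network could be split so that the metallb pools of both clusters never overlap. This is not the repository's code: the `lb_range` function and the `.201`/`.202` blocks are hypothetical, and the sketch assumes the docker network uses a /16 subnet (which is what docker usually assigns to user-defined networks).

```shell
# Hypothetical helper: derive a metallb address range for cluster N from the
# /16 subnet of the shared docker network. Cluster N gets the x.y.(200+N).0/24
# block, so cluster 1 and cluster 2 never hand out the same address.
lb_range() {
  subnet=$1    # e.g. 172.30.0.0/16
  cluster=$2   # cluster number: 1 or 2
  case "$subnet" in
    */16) ;;
    *) echo "lb_range: expected a /16 subnet, got '$subnet'" >&2; return 1 ;;
  esac
  prefix=${subnet%/*}        # strip the mask   -> 172.30.0.0
  base=${prefix%.*.*}        # keep two octets  -> 172.30
  block=$((200 + cluster))   # 201 for cluster 1, 202 for cluster 2
  echo "${base}.${block}.1-${base}.${block}.254"
}
```

For example, `lb_range "$(docker network inspect -f '{{(index .IPAM.Config 0).Subnet}}' cilium)" 1` would print the range for the first cluster, assuming the shared network is called `cilium`; metallb accepts `first-last` ranges like that one in the `addresses` list of an `IPAddressPool`. A high block is used because docker assigns container addresses starting from the bottom of the subnet.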

The installations use cilium as the CNI, metallb for BGP (I tested the cilium options, but I wasn’t able to configure them correctly) and nginx as the ingress controller (again, I tried to use cilium, but something didn’t work either).

To be able to use the previous components, some default options have been disabled on k3d and kind and, in the case of k3d, a lot of k3s options (traefik, servicelb, kubeproxy, network-policy, …) have also been disabled to avoid conflicts.
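For k3d, a rough sketch of what turning those k3s components off looks like on the command line (the repository does it through tmpl/k3d-config.yaml instead). The `k3d_create_for_cilium` function and the network name are hypothetical; disabling flannel is an assumption of this sketch, based on cilium replacing it as the CNI. Each `--k3s-arg` uses k3d's `ARG@NODEFILTER` syntax to pass the flag to the server nodes.

```shell
# Hypothetical wrapper: create a k3d cluster prepared for cilium by disabling
# the k3s components that would conflict with it.
# Set K3D=echo to print the command instead of running it.
k3d_create_for_cilium() {
  name=$1      # cluster name, e.g. cilium1
  network=$2   # shared docker network, e.g. cilium
  ${K3D:-k3d} cluster create "$name" \
    --network "$network" \
    --k3s-arg '--flannel-backend=none@server:*' \
    --k3s-arg '--disable-network-policy@server:*' \
    --k3s-arg '--disable-kube-proxy@server:*' \
    --k3s-arg '--disable=traefik@server:*' \
    --k3s-arg '--disable=servicelb@server:*'
}
```

Running `k3d_create_for_cilium cilium1 cilium` and then `k3d_create_for_cilium cilium2 cilium` would place both clusters on the same docker network, as described above.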

To use the scripts we need to install cilium, docker, helm, hubble, k3d, kind, kubectl and tmpl on our system.
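Before running anything it can be handy to check which of these tools are already on the `PATH`. A small sketch using the POSIX `command -v` builtin (the `missing_tools` name is hypothetical, not part of the repository):

```shell
# Hypothetical helper: print every tool that is not on PATH, one per line,
# and return a non-zero status if at least one of them is missing.
missing_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Calling `missing_tools cilium docker helm hubble k3d kind kubectl tmpl` prints nothing and succeeds when everything is installed.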

After cloning the repository, the sbin/tools.sh script can be used to install them on a linux-amd64 system:

$ git clone https://forgejo.mixinet.net/blogops/cilium-docker.git

$ cd cilium-docker

$ ./sbin/tools.sh apps

Once we have the tools, to install everything on k3d (for kind, replace k3d with kind) we can use the sbin/cilium-install.sh script as follows:

$ # Deploy first k3d cluster with cilium & cluster-mesh

$ ./sbin/cilium-install.sh k3d 1 full

[...]

$ # Deploy second k3d cluster with cilium & cluster-mesh

$ ./sbin/cilium-install.sh k3d 2 full

[...]

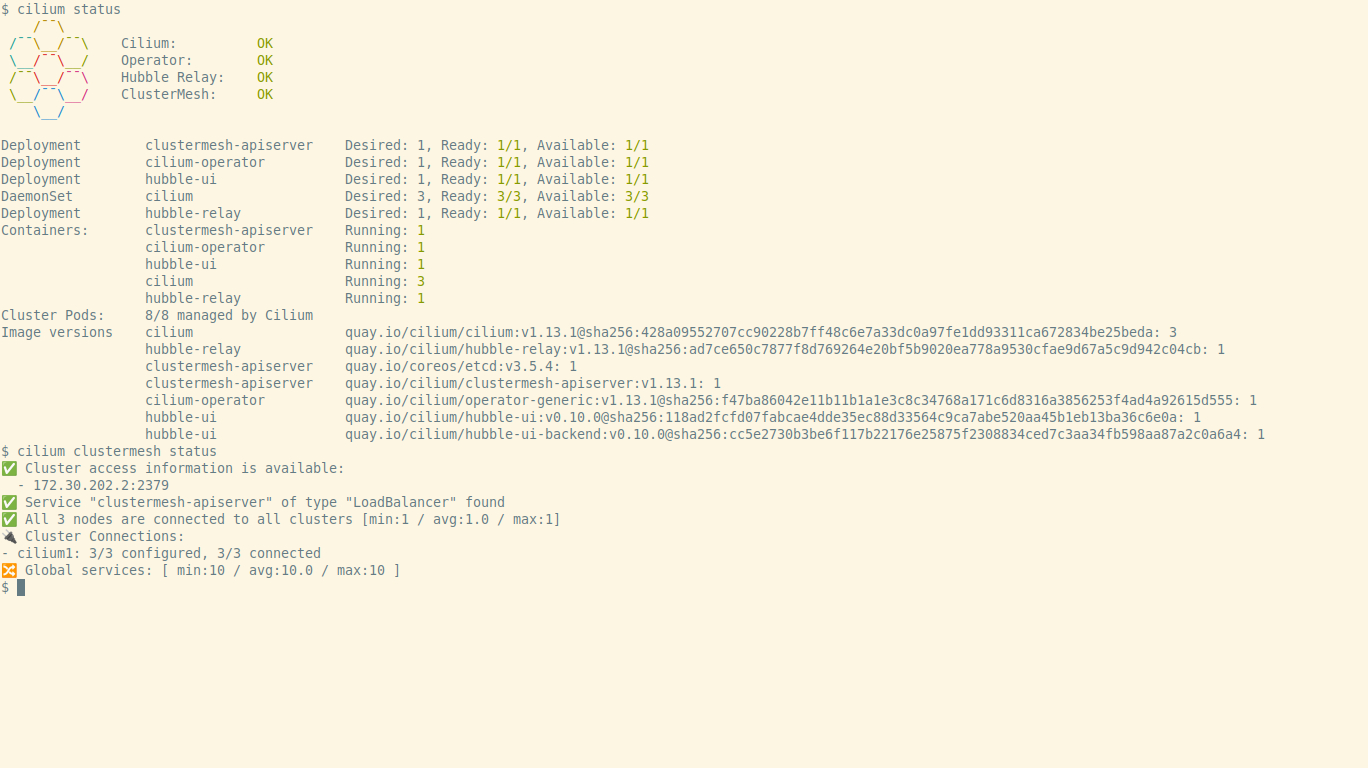

$ # The 2nd cluster-mesh installation connects the clustersIf we run the command cilium status after the installation we should get an

output similar to the one seen on the following screenshot:

The installation script uses the following templates:

- tmpl/k3d-config.yaml: configuration to deploy the k3d cluster.

- tmpl/kind-config.yaml: configuration to deploy the kind cluster.

- tmpl/metallb-crds.yaml and tmpl/ippols.yaml: configurations for the metallb deployment.

- tmpl/cilium.yaml: values to deploy cilium using the helm chart.

Once we have finished our tests we can remove the installation using the sbin/cilium-remove.sh script.
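The cluster mesh part of the `full` installation is done with the cilium CLI rather than with helm (the reason is explained in the notes about the configuration). A rough sketch of the calls involved, assuming each cluster was installed with a unique cluster name and ID, the contexts are called k3d-cilium1 and k3d-cilium2 (as in the test output of this post), and the clustermesh API server is exposed through a LoadBalancer service that gets its address from metallb. The `connect_mesh` function is hypothetical and is not a literal copy of what sbin/cilium-install.sh runs.

```shell
# Hypothetical sketch: enable cluster mesh on two clusters and connect them
# with the cilium CLI. Set CILIUM=echo to print the commands instead.
connect_mesh() {
  ctx1=$1   # e.g. k3d-cilium1
  ctx2=$2   # e.g. k3d-cilium2
  for ctx in "$ctx1" "$ctx2"; do
    # Deploy the clustermesh-apiserver behind a LoadBalancer service,
    # which gets an address from the metallb pool
    ${CILIUM:-cilium} clustermesh enable --context "$ctx" \
      --service-type LoadBalancer || return 1
  done
  # Connect the two clusters
  ${CILIUM:-cilium} clustermesh connect --context "$ctx1" \
    --destination-context "$ctx2" || return 1
  # Block until the mesh reports that it is ready
  ${CILIUM:-cilium} clustermesh status --context "$ctx1" --wait
}
```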

Some notes about the configuration

As noted in the documentation, the cilium deployment needs to mount the bpffs on /sys/fs/bpf and cgroupv2 on /run/cilium/cgroupv2; that is done automatically on kind, but fails on k3d because the image does not include bash (see this issue).

To fix it we mount a script on all the k3d containers that is executed each time they are started (the script is mounted as /bin/k3d-entrypoint-cilium.sh because the /bin/k3d-entrypoint.sh script executes the scripts that follow the pattern /bin/k3d-entrypoint-*.sh before launching the k3s daemon). The source code of the script is available here.
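A hypothetical sketch of what such an entrypoint could contain (the real one is the script linked above). It sticks to plain POSIX sh, since the missing bash is the whole problem, and it only mounts each filesystem when it is not mounted yet. The `is_mounted` and `mount_cilium_fs` names are illustrative.

```shell
#!/bin/sh
# Hypothetical sketch of /bin/k3d-entrypoint-cilium.sh (POSIX sh only,
# the k3s image ships without bash).

# is_mounted TARGET [MOUNTS_FILE]: succeed if TARGET is listed as a mount
# point in MOUNTS_FILE (defaults to /proc/mounts).
is_mounted() {
  grep -qF " $1 " "${2:-/proc/mounts}"
}

# Mount the eBPF filesystem and a cgroup v2 hierarchy where cilium expects them.
mount_cilium_fs() {
  if ! is_mounted /sys/fs/bpf; then
    mount -t bpf bpffs /sys/fs/bpf
  fi
  if ! is_mounted /run/cilium/cgroupv2; then
    mkdir -p /run/cilium/cgroupv2
    mount -t cgroup2 none /run/cilium/cgroupv2
  fi
}
```

The actual entrypoint would end with a call to `mount_cilium_fs`; it is left out here so the functions can be tried without root. k3d's `--volume` flag accepts a node filter, so something like `--volume "$PWD/k3d-entrypoint-cilium.sh:/bin/k3d-entrypoint-cilium.sh@all"` (local path is a placeholder, and the file must be executable) would mount it on every node.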

When testing the multi-cluster deployment with k3d we found issues with open files that look related to inotify (see this page in the kind documentation); adding the following to the /etc/sysctl.conf file fixed the issue:

# fix inotify issues with docker & k3d

fs.inotify.max_user_watches = 524288

fs.inotify.max_user_instances = 512

Although the deployment theoretically supports it, we are not using cilium as the cluster ingress yet (it did not work, so it is no longer enabled) and we are also ignoring the gateway-api for now.

The documentation uses the cilium cli to do all the installations, but I noticed that following that route the current version does not work right with hubble (it messes up the TLS support; there are some notes about the problems on this cilium issue), so we are deploying with helm right now.

The problem with the helm approach is that there is no official documentation on how to install the cluster mesh with it (there is a request for documentation here), so we are using the cilium cli for that part for now, and it looks like it does not break the hubble configuration.
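To check whether a machine already has high enough inotify limits before starting the clusters, a small sketch that compares the current values with the ones used in the sysctl.conf snippet above. The `inotify_ok` function is hypothetical; its directory argument only exists so it can be pointed at a copy of the files for testing.

```shell
# Hypothetical helper: succeed only if the inotify limits are at least the
# values from /etc/sysctl.conf (524288 watches, 512 instances).
inotify_ok() {
  dir=${1:-/proc/sys/fs/inotify}
  watches=$(cat "$dir/max_user_watches") || return 1
  instances=$(cat "$dir/max_user_instances") || return 1
  [ "$watches" -ge 524288 ] && [ "$instances" -ge 512 ]
}
```

For example, `inotify_ok || echo 'raise the fs.inotify limits and reload them with: sudo sysctl -p'`; `sysctl -p` rereads /etc/sysctl.conf without a reboot.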

Tests

To test cilium we have used some scripts & additional config files that are available in the test subdirectory of the repository:

- cilium-connectivity.sh: a script that runs the cilium connectivity test for one cluster or in multi-cluster mode (for mesh testing). If we export the variable HUBBLE_PF=true the script executes the command cilium hubble port-forward before launching the tests.

- http-sw.sh: simple tests for cilium policies from the cilium demo; the script deploys the Star Wars demo application and allows us to add the L3/L4 policy or the L3/L4/L7 policy, test the connectivity and view the policies.

- ingress-basic.sh: this test checks the ingress controller; it is prepared to work against cilium and nginx, but, as explained before, the use of cilium as an ingress controller is not working as expected, so for now the idea is to always call it with nginx as the first argument.

- mesh-test.sh: tool to deploy a global service on two clusters, change the service affinity to local or remote, enable or disable whether the service is shared, and test how the tools respond.
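A hypothetical sketch of how a wrapper like cilium-connectivity.sh could map its arguments to cilium CLI calls. It is not the script's code: treating `12` as "clusters 1 and 2 in multi-cluster mode" and the `<platform>-cilium<N>` context names are assumptions based on the sample output (for kind the context names may differ), and `run_connectivity` is an illustrative name.

```shell
# Hypothetical sketch: run the cilium connectivity test on one cluster or
# across both. Set CILIUM=echo to print the command instead of running it.
run_connectivity() {
  platform=$1   # k3d or kind
  case "$2" in
    1|2)
      # Single cluster
      ${CILIUM:-cilium} connectivity test --context "${platform}-cilium$2" ;;
    12)
      # Multi-cluster mode: cluster 1 runs the test against cluster 2
      ${CILIUM:-cilium} connectivity test --context "${platform}-cilium1" \
        --multi-cluster "${platform}-cilium2" ;;
    *)
      echo "usage: run_connectivity k3d|kind 1|2|12" >&2
      return 1 ;;
  esac
}
```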

Running the tests

The cilium-connectivity.sh script runs the standard cilium connectivity tests:

$ ./test/cilium-connectivity.sh k3d 12

ℹ️ Monitor aggregation detected, will skip some flow validation steps

✨ [k3d-cilium1] Creating namespace cilium-test for connectivity check...

✨ [k3d-cilium2] Creating namespace cilium-test for connectivity check...

[...]

✅ All 33 tests (248 actions) successful, 2 tests skipped, 0 scenarios skipped.

To test how the cilium policies work, use the http-sw.sh script:

kubectx k3d-cilium2 # (just in case)

# Create test namespace and services

./test/http-sw.sh create

# Test without policies (exhaust-port fails by design)

./test/http-sw.sh test

# Create and view L3/L4 CiliumNetworkPolicy

./test/http-sw.sh policy-l34

# Test policy (no access from xwing, exhaust-port fails)

./test/http-sw.sh test

# Create and view L7 CiliumNetworkPolicy

./test/http-sw.sh policy-l7

# Test policy (no access from xwing, exhaust-port returns 403)

./test/http-sw.sh test

# Delete http-sw test

./test/http-sw.sh delete

And to see how the service mesh works, use the mesh-test.sh script:

# Create services on both clusters and test

./test/mesh-test.sh k3d create

./test/mesh-test.sh k3d test

# Disable service sharing from cluster 1 and test

./test/mesh-test.sh k3d svc-shared-false

./test/mesh-test.sh k3d test

# Restore sharing, set local affinity and test

./test/mesh-test.sh k3d svc-shared-default

./test/mesh-test.sh k3d svc-affinity-local

./test/mesh-test.sh k3d test

# Delete deployment from cluster 1 and test

./test/mesh-test.sh k3d delete-deployment

./test/mesh-test.sh k3d test

# Delete test

./test/mesh-test.sh k3d delete
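The mesh-test.sh subcommands map naturally to the annotations recent Cilium releases use for global services. The sketch below shows one hypothetical way to apply them with kubectl; it is not the script's actual code, the function, service and namespace names are placeholders, and it assumes the service is already marked as global (`service.cilium.io/global=true`) on both clusters.

```shell
# Hypothetical sketch: translate mesh-test.sh style actions into cilium
# global-service annotations. Set KUBECTL=echo to print the command instead.
mesh_annotate() {
  ctx=$1; ns=$2; svc=$3; action=$4
  case "$action" in
    svc-shared-false)    ann='service.cilium.io/shared=false' ;;
    # Removing the annotation restores the default (shared)
    svc-shared-default)  ann='service.cilium.io/shared-' ;;
    svc-affinity-local)  ann='service.cilium.io/affinity=local' ;;
    svc-affinity-remote) ann='service.cilium.io/affinity=remote' ;;
    *) echo "mesh_annotate: unknown action '$action'" >&2; return 1 ;;
  esac
  ${KUBECTL:-kubectl} annotate --context "$ctx" -n "$ns" \
    service "$svc" "$ann" --overwrite
}
```

With `local` affinity the service prefers backends in its own cluster and only falls back to remote ones when none are available, which is what the delete-deployment step above exercises.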