For a long time I’ve been wanting to try GitOps tools, but I haven’t had the chance to try them for real on the projects I was working on.

Now that I have some spare time I’ve decided to play a little with Argo CD, Flux and Kluctl to test them, so I can use one of them in a real project in the future if it looks appropriate.

In this post I will use Argo-CD Autopilot to install argocd on a k3d local cluster installed using OpenTofu, to test the autopilot approach of managing argocd and to test the tool itself (as it manages argocd using a git repository, it can be used to test argocd as well).

Installing tools locally with arkade

Recently I’ve been using the arkade tool to install kubernetes related applications on Linux servers and containers; I usually get the applications with it and install them in the /usr/local/bin folder.

For this post I’ve created a simple script that checks if the tools I’ll be using are available and installs them in the $HOME/.arkade/bin folder if they are missing (I’m assuming that docker is already available, as it is not installable with arkade):

#!/bin/sh

# TOOLS LIST

ARKADE_APPS="argocd argocd-autopilot k3d kubectl sops tofu"

# Add the arkade binary directory to the path if missing

case ":${PATH}:" in

*:"${HOME}/.arkade/bin":*) ;;

*) export PATH="${PATH}:${HOME}/.arkade/bin" ;;

esac

# Install or update arkade

if command -v arkade >/dev/null; then

echo "Trying to update the arkade application"

sudo arkade update

else

echo "Installing the arkade application"

curl -sLS https://get.arkade.dev | sudo sh

fi

echo ""

echo "Installing tools with arkade"

echo ""

for app in $ARKADE_APPS; do

app_path="$(command -v "$app")" || true

if [ "$app_path" ]; then

echo "The application '$app' is already available at '$app_path'"

else

arkade get "$app"

fi

done

cat <<EOF

Add the ~/.arkade/bin directory to your PATH if tools have been installed there

EOF

The rest of the scripts add the binary directory to the PATH if it is missing, to make sure things work if something was installed there.
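As the same PATH check is repeated in every script, it could be factored into a small function. The following is only a sketch; path_append is a hypothetical helper name that does not appear in the original scripts:

```shell
#!/bin/sh
# Append a directory to PATH only if it is not already there.
# path_append is a hypothetical helper, it is not part of arkade.
path_append() {
  case ":${PATH}:" in
  *:"$1":*) ;;             # already present, nothing to do
  *) PATH="${PATH}:$1" ;;  # append the directory at the end
  esac
  export PATH
}

# Same effect as the case statement used in the scripts of this post
path_append "${HOME}/.arkade/bin"
```

Calling the function twice with the same directory leaves the PATH unchanged the second time, which is what makes it safe to repeat in each script.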

Creating a k3d cluster with opentofu

Although using k3d directly would be a good choice for creating the cluster, I’m using tofu because that would probably be the tool used if we were working with cloud platforms like AWS or Google.

The main.tf file is as follows:

terraform {

required_providers {

k3d = {

source = "moio/k3d"

version = "0.0.12"

}

sops = {

source = "carlpett/sops"

version = "1.2.0"

}

}

}

data "sops_file" "secrets" {

source_file = "secrets.yaml"

}

resource "k3d_cluster" "argocd_cluster" {

name = "argocd"

servers = 1

agents = 2

image = "rancher/k3s:v1.31.5-k3s1"

network = "argocd"

token = data.sops_file.secrets.data["token"]

port {

host_port = 8443

container_port = 443

node_filters = [

"loadbalancer",

]

}

k3d {

disable_load_balancer = false

disable_image_volume = false

}

kubeconfig {

update_default_kubeconfig = true

switch_current_context = true

}

runtime {

gpu_request = "all"

}

}

The k3d configuration is quite simple. As I plan to use the default traefik ingress controller with TLS, I publish port 443 on the host’s port 8443; I’ll explain how I add a valid certificate in the next step.
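The configuration reads the cluster token from a sops encrypted secrets.yaml file, so sops needs to know which key to use when encrypting. The post does not say which key type is configured; the sketch below assumes an age key and a .sops.yaml file in the same directory, and write_sops_config is a hypothetical helper name:

```shell
#!/bin/sh
# Write a minimal .sops.yaml that encrypts every file for one age recipient.
# ASSUMPTION: an age key is used; the original post does not state the key type.
write_sops_config() {
  # $1: age public key (age1...), $2: output file (default: .sops.yaml)
  cat >"${2:-.sops.yaml}" <<EOF
creation_rules:
  - age: "$1"
EOF
}

# Example usage (commented out so nothing is created by accident):
# mkdir -p "$HOME/.config/sops/age"
# age-keygen -o "$HOME/.config/sops/age/keys.txt"
# write_sops_config "$(age-keygen -y "$HOME/.config/sops/age/keys.txt")"
```

With a configuration like this in place, sops can encrypt the file without extra arguments, and the private key is looked up in the default age keys file when decrypting.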

I’ve prepared the following script to initialize and apply the changes:

#!/bin/sh

set -e

# VARIABLES

# Default token for the argocd cluster

K3D_CLUSTER_TOKEN="argocdToken"

# Relative PATH to install the k3d cluster using terraform

K3D_TF_RELPATH="k3d-tf"

# Secrets yaml file

SECRETS_YAML="secrets.yaml"

# Relative PATH to the workdir from the script directory

WORK_DIR_RELPATH=".."

# Compute WORKDIR

SCRIPT="$(readlink -f "$0")"

SCRIPT_DIR="$(dirname "$SCRIPT")"

WORK_DIR="$(readlink -f "$SCRIPT_DIR/$WORK_DIR_RELPATH")"

# Update the PATH to add the arkade bin directory

# Add the arkade binary directory to the path if missing

case ":${PATH}:" in

*:"${HOME}/.arkade/bin":*) ;;

*) export PATH="${PATH}:${HOME}/.arkade/bin" ;;

esac

# Go to the k3d-tf dir

cd "$WORK_DIR/$K3D_TF_RELPATH" || exit 1

# Create secrets.yaml file and encode it with sops if missing

if [ ! -f "$SECRETS_YAML" ]; then

echo "token: $K3D_CLUSTER_TOKEN" >"$SECRETS_YAML"

sops encrypt -i "$SECRETS_YAML"

fi

# Initialize terraform

tofu init

# Apply the configuration

tofu apply

Adding a wildcard certificate to the k3d ingress

As an optional step, after creating the k3d cluster I’m going to add a default wildcard certificate for the traefik ingress server, to be able to use everything with HTTPS without certificate issues.

As I manage my own DNS domain I’ve created the lo.mixinet.net and *.lo.mixinet.net DNS entries on my public and private DNS servers (both return 127.0.0.1 and ::1) and I’ve created a TLS certificate for both entries using Let’s Encrypt with Certbot.

The certificate is updated automatically on one of my servers and, when I need it, I copy the contents of the fullchain.pem and privkey.pem files from the /etc/letsencrypt/live/lo.mixinet.net server directory to the local files lo.mixinet.net.crt and lo.mixinet.net.key.
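The copy can be scripted; the following is a rough sketch that assumes ssh access to the server and permission to use sudo there (the private key is usually readable only by root). The helper names and the server name used in the usage note are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the path of a Certbot file for a domain.
le_live_file() {
  # $1: domain, $2: file name (fullchain.pem or privkey.pem)
  printf '/etc/letsencrypt/live/%s/%s\n' "$1" "$2"
}

# Hypothetical helper: copy the certificate and key to DOMAIN.crt and DOMAIN.key.
# ASSUMPTION: the remote user can run 'sudo cat' on the server.
fetch_cert() {
  # $1: ssh host, $2: domain
  ssh "$1" sudo cat "$(le_live_file "$2" fullchain.pem)" >"$2.crt"
  # Create the key file readable only by the current user
  (
    umask 077
    ssh "$1" sudo cat "$(le_live_file "$2" privkey.pem)" >"$2.key"
  )
}
```

It would be called as `fetch_cert my-server lo.mixinet.net`, where my-server is a placeholder for the host that runs Certbot.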

After copying the files I run the following script to install or update the certificate and configure it as the default for traefik:

#!/bin/sh
# Script to install or update the default TLS certificate used by traefik
secret="lo-mixinet-net-ingress-cert"
cert="${1:-lo.mixinet.net.crt}"
key="${2:-lo.mixinet.net.key}"
if [ -f "$cert" ] && [ -f "$key" ]; then
  # Create or update the TLS secret
  kubectl -n kube-system create secret tls "$secret" \
    --key="$key" \
    --cert="$cert" \
    --dry-run=client --save-config -o yaml | kubectl apply -f -
  # Use the secret as the default certificate for traefik
  kubectl apply -f - <<EOF
apiVersion: traefik.containo.us/v1alpha1
kind: TLSStore
metadata:
  name: default
  namespace: kube-system
spec:
  defaultCertificate:
    secretName: $secret
EOF
else
  cat <<EOF
To add or update the traefik TLS certificate the following files are needed:

- cert: '$cert'
- key: '$key'

Note: you can pass the paths as arguments to this script.
EOF
fi

Once it is installed, if I connect to https://foo.lo.mixinet.net:8443/ I get a 404 but the certificate is valid.
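The same check can be done from the command line with curl, which validates the certificate by default and exits with an error if it is not trusted. This is a small sketch; http_status and ingress_url are hypothetical helper names:

```shell
#!/bin/sh
# Hypothetical helper: build the ingress URL for a host name.
ingress_url() {
  # $1: host name, $2: published port (8443 in the k3d configuration)
  printf 'https://%s:%s/\n' "$1" "${2:-8443}"
}

# Hypothetical helper: print the HTTP status code returned by a URL.
# curl verifies the certificate, so a TLS problem makes the call fail.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}
```

Running `http_status "$(ingress_url foo.lo.mixinet.net)"` against the cluster should print the 404 mentioned above if the certificate is accepted.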

Installing argocd with argocd-autopilot

Creating a repository and a token for autopilot

I’ll be using a project on my forgejo instance to manage argocd; the repository I’ve created is on the URL https://forgejo.mixinet.net/blogops/argocd and I’ve created a private user named argocd that only has write access to that repository.

Logged in as the argocd user on forgejo, I’ve created a token with permission to read and write repositories that I’ve saved in my pass password store, on the mixinet.net/argocd@forgejo/repository-write entry.

Bootstrapping the installation

To bootstrap the installation I’ve used the following script (it uses the previous GIT_REPO and GIT_TOKEN values):

#!/bin/sh

set -e

# VARIABLES

# Relative PATH to the workdir from the script directory

WORK_DIR_RELPATH=".."

# Compute WORKDIR

SCRIPT="$(readlink -f "$0")"

SCRIPT_DIR="$(dirname "$SCRIPT")"

WORK_DIR="$(readlink -f "$SCRIPT_DIR/$WORK_DIR_RELPATH")"

# Update the PATH to add the arkade bin directory

# Add the arkade binary directory to the path if missing

case ":${PATH}:" in

*:"${HOME}/.arkade/bin":*) ;;

*) export PATH="${PATH}:${HOME}/.arkade/bin" ;;

esac

# Go to the working directory

cd "$WORK_DIR" || exit 1

# Set GIT variables

if [ -z "$GIT_REPO" ]; then

export GIT_REPO="https://forgejo.mixinet.net/blogops/argocd.git"

fi

if [ -z "$GIT_TOKEN" ]; then

GIT_TOKEN="$(pass mixinet.net/argocd@forgejo/repository-write)"

export GIT_TOKEN

fi

argocd-autopilot repo bootstrap --provider gitea

The output of the execution is as follows:

❯ bin/argocd-bootstrap.sh

INFO cloning repo: https://forgejo.mixinet.net/blogops/argocd.git

INFO empty repository, initializing a new one with specified remote

INFO using revision: "", installation path: ""

INFO using context: "k3d-argocd", namespace: "argocd"

INFO applying bootstrap manifests to cluster...

namespace/argocd created

customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io created

customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io created

customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io created

serviceaccount/argocd-application-controller created

serviceaccount/argocd-applicationset-controller created

serviceaccount/argocd-dex-server created

serviceaccount/argocd-notifications-controller created

serviceaccount/argocd-redis created

serviceaccount/argocd-repo-server created

serviceaccount/argocd-server created

role.rbac.authorization.k8s.io/argocd-application-controller created

role.rbac.authorization.k8s.io/argocd-applicationset-controller created

role.rbac.authorization.k8s.io/argocd-dex-server created

role.rbac.authorization.k8s.io/argocd-notifications-controller created

role.rbac.authorization.k8s.io/argocd-redis created

role.rbac.authorization.k8s.io/argocd-server created

clusterrole.rbac.authorization.k8s.io/argocd-application-controller created

clusterrole.rbac.authorization.k8s.io/argocd-applicationset-controller created

clusterrole.rbac.authorization.k8s.io/argocd-server created

rolebinding.rbac.authorization.k8s.io/argocd-application-controller created

rolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created

rolebinding.rbac.authorization.k8s.io/argocd-dex-server created

rolebinding.rbac.authorization.k8s.io/argocd-notifications-controller created

rolebinding.rbac.authorization.k8s.io/argocd-redis created

rolebinding.rbac.authorization.k8s.io/argocd-server created

clusterrolebinding.rbac.authorization.k8s.io/argocd-application-controller created

clusterrolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created

clusterrolebinding.rbac.authorization.k8s.io/argocd-server created

configmap/argocd-cm created

configmap/argocd-cmd-params-cm created

configmap/argocd-gpg-keys-cm created

configmap/argocd-notifications-cm created

configmap/argocd-rbac-cm created

configmap/argocd-ssh-known-hosts-cm created

configmap/argocd-tls-certs-cm created

secret/argocd-notifications-secret created

secret/argocd-secret created

service/argocd-applicationset-controller created

service/argocd-dex-server created

service/argocd-metrics created

service/argocd-notifications-controller-metrics created

service/argocd-redis created

service/argocd-repo-server created

service/argocd-server created

service/argocd-server-metrics created

deployment.apps/argocd-applicationset-controller created

deployment.apps/argocd-dex-server created

deployment.apps/argocd-notifications-controller created

deployment.apps/argocd-redis created

deployment.apps/argocd-repo-server created

deployment.apps/argocd-server created

statefulset.apps/argocd-application-controller created

networkpolicy.networking.k8s.io/argocd-application-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-applicationset-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-dex-server-network-policy created

networkpolicy.networking.k8s.io/argocd-notifications-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-redis-network-policy created

networkpolicy.networking.k8s.io/argocd-repo-server-network-policy created

networkpolicy.networking.k8s.io/argocd-server-network-policy created

secret/autopilot-secret created

INFO pushing bootstrap manifests to repo

INFO applying argo-cd bootstrap application

INFO pushing bootstrap manifests to repo

INFO applying argo-cd bootstrap application

application.argoproj.io/autopilot-bootstrap created

INFO running argocd login to initialize argocd config

Context 'autopilot' updated

INFO argocd initialized. password: XXXXXXX-XXXXXXXX

INFO run:

kubectl port-forward -n argocd svc/argocd-server 8080:80

Now we have argocd installed and running; it can be checked using the port-forward and connecting to https://localhost:8080/ (the certificate will be wrong, we are going to fix that in the next step).
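If the password printed by the bootstrap command is lost, it can usually be recovered from the cluster. The sketch below assumes that the argocd-initial-admin-secret created by Argo CD is still present in the argocd namespace; the function name and the KUBECTL variable are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the initial admin password of Argo CD.
# ASSUMPTION: the argocd-initial-admin-secret has not been removed.
# KUBECTL can be set to use a different kubectl binary or wrapper.
argocd_initial_password() {
  "${KUBECTL:-kubectl}" -n argocd get secret argocd-initial-admin-secret \
    -o jsonpath='{.data.password}' | base64 -d
}
```

The value is stored base64 encoded in the secret, which is why the output is piped through `base64 -d`.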

Updating the argocd installation in git

Now that we have the application deployed we can clone the argocd repository and edit the deployment to disable TLS for the argocd server (we are going to use TLS termination with traefik and that needs the server running as insecure, see the Argo CD documentation):

❯ git clone ssh://git@forgejo.mixinet.net/blogops/argocd.git
❯ cd argocd
❯ edit bootstrap/argo-cd/kustomization.yaml
❯ git commit -a -m 'Disable TLS for the argocd-server'
❯ git push

The changes made to the kustomization.yaml file are the following:

--- a/bootstrap/argo-cd/kustomization.yaml

+++ b/bootstrap/argo-cd/kustomization.yaml

@@ -11,6 +11,11 @@ configMapGenerator:

key: git_username

name: autopilot-secret

name: argocd-cm

+ # Disable TLS for the Argo Server (see https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#traefik-v30)

+- behavior: merge

+ literals:

+ - "server.insecure=true"

+ name: argocd-cmd-params-cm

kind: Kustomization

namespace: argocd

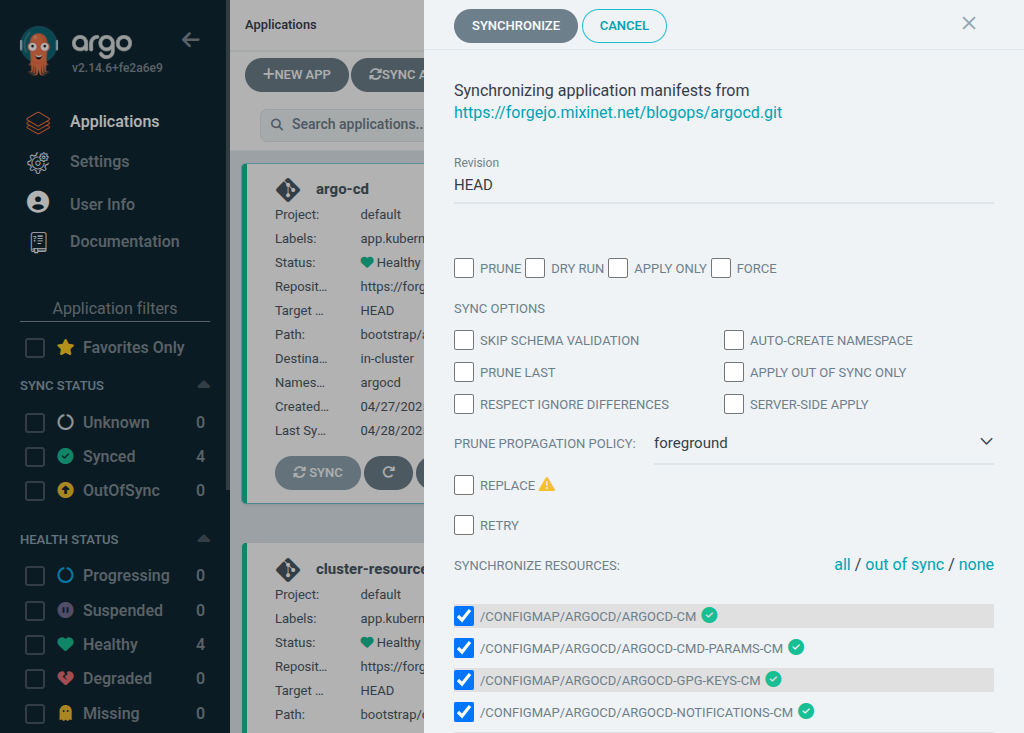

resources:Once the changes are pushed we sync the argo-cd application manually to make sure they are applied:
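The manual sync could also be launched from the command line instead of the web interface. This is only a sketch; it assumes that the argocd CLI is already logged in to the server (for example through the port-forward), and sync_app and the ARGOCD variable are hypothetical names:

```shell
#!/bin/sh
# Hypothetical helper: sync an application and wait until it is healthy.
# ASSUMPTION: 'argocd login' has been run against the server before.
# ARGOCD can be set to use a different argocd binary or wrapper.
sync_app() {
  # $1: application name (argo-cd for the argocd deployment itself)
  "${ARGOCD:-argocd}" app sync "$1" &&
    "${ARGOCD:-argocd}" app wait "$1" --health
}
```

For the change described here the call would be `sync_app argo-cd`.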

As a test we can download the argocd-cmd-params-cm ConfigMap to make sure everything is OK:

apiVersion: v1
data:
  server.insecure: "true"
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"server.insecure":"true"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/instance":"argo-cd","app.kubernetes.io/name":"argocd-cmd-params-cm","app.kubernetes.io/part-of":"argocd"},"name":"argocd-cmd-params-cm","namespace":"argocd"}}
  creationTimestamp: "2025-04-27T17:31:54Z"
  labels:
    app.kubernetes.io/instance: argo-cd
    app.kubernetes.io/name: argocd-cmd-params-cm
    app.kubernetes.io/part-of: argocd
  name: argocd-cmd-params-cm
  namespace: argocd
  resourceVersion: "16731"
  uid: a460638f-1d82-47f6-982c-3017699d5f14

As this only changes the ConfigMap, we have to restart the argocd-server so it reads it again; to do that we delete the server pods so they are re-created using the updated resource:

❯ kubectl delete pods -n argocd -l app.kubernetes.io/name=argocd-server

After doing this the port-forward command is killed automatically; if we run it again, the connection to the argocd-server has to be done using HTTP instead of HTTPS.
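An alternative to deleting the pods is a rolling restart of the deployment, which also waits until the new pods are ready. The following is a sketch of that option, not what was used in this post; the function name and the KUBECTL variable are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: restart the argocd-server deployment and wait for it.
# KUBECTL can be set to use a different kubectl binary or wrapper.
restart_argocd_server() {
  "${KUBECTL:-kubectl}" -n argocd rollout restart deployment/argocd-server &&
    "${KUBECTL:-kubectl}" -n argocd rollout status deployment/argocd-server
}
```

The port-forward is still interrupted in this case, because the pod it was connected to is replaced.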

Instead of testing that, we are going to add an ingress definition to be able to connect to the server using HTTPS and GRPC against the address argocd.lo.mixinet.net, using the wildcard TLS certificate we installed earlier.

To do it we have to edit the bootstrap/argo-cd/kustomization.yaml file to add the ingress_route.yaml file to the deployment:

--- a/bootstrap/argo-cd/kustomization.yaml

+++ b/bootstrap/argo-cd/kustomization.yaml

@@ -20,3 +20,4 @@ kind: Kustomization

namespace: argocd

resources:

- github.com/argoproj-labs/argocd-autopilot/manifests/base?ref=v0.4.19

+- ingress_route.yaml

The contents of the ingress_route.yaml file are the following:

apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: argocd-server
  namespace: argocd
spec:
  entryPoints:
    - websecure
  routes:
    - kind: Rule
      match: Host(`argocd.lo.mixinet.net`)
      priority: 10
      services:
        - name: argocd-server
          port: 80
    - kind: Rule
      match: Host(`argocd.lo.mixinet.net`) && Header(`Content-Type`, `application/grpc`)
      priority: 11
      services:
        - name: argocd-server
          port: 80
          scheme: h2c
  tls:
    certResolver: default

After pushing the changes and waiting a little bit the change is applied and we can access the server using HTTPS and GRPC; the first can be tested from a browser and the second using the command line interface:

❯ argocd --grpc-web login argocd.lo.mixinet.net:8443

Username: admin

Password:

'admin:login' logged in successfully

Context 'argocd.lo.mixinet.net:8443' updated

❯ argocd app list -o name

argocd/argo-cd

argocd/autopilot-bootstrap

argocd/cluster-resources-in-cluster

argocd/root

So things are working fine … and that is all for this post, folks!